|

MAIN PAGE

> Back to contents

Security Issues

Reference:

Nikitin P.V., Gorokhova R.I., Bakhtina E.Y., Dolgov V.I., Korovin D.I.

Algorithms for extracting information from problem-oriented texts on the example of government contracts

// Security Issues.

2023. № 3.

P. 1-10.

DOI: 10.25136/2409-7543.2023.3.43543 EDN: XNUXIB URL: https://en.nbpublish.com/library_read_article.php?id=43543

Algorithms for extracting information from problem-oriented texts on the example of government contracts

Nikitin Petr Vladimirovich

ORCID: 0000-0001-8866-5610

PhD in Pedagogy

Associate Professor, Department of Data Analysis and Machine Learning, Financial University under the Government of the Russian Federation

125993, Russia, Moscow, Leningradsky Prospekt, 49, office building 2

|

pvnikitin@fa.ru

|

|

|

Other publications by this author

|

|

Gorokhova Rimma Ivanovna

PhD in Pedagogy

Associate Professor, Department of Data Analysis and Machine Learning, Financial University under the Government of the Russian Federation

125167, Russia, Moscow, Leningradsky Prospekt, 49

|

rigorokhova@fa.ru

|

|

|

Other publications by this author

|

|

Bakhtina Elena Yur'evna

PhD in Physics and Mathematics

Associate Professor, Moscow Automobile and Road Engineering State Technical University (MADI)

125319, Russia, Moscow, Leningradsky Prospekt, 46

|

elbakh@gmail.com

|

|

|

Other publications by this author

|

|

Dolgov Vitalii Igorevich

PhD in Physics and Mathematics

Associate Professor, Department of Data Analysis and Machine Learning, Financial University under the Government of the Russian Federation

125319, Russia, Moscow, Leningradsky Prospekt str., 49

|

vidolgov@fa.ru

|

|

|

Other publications by this author

|

|

|

Korovin Dmitrii Igorevich

Doctor of Economics

Professor, Department of Data Analysis and Machine Learning, Financial University under the Government of the Russian Federation

125319, Russia, Moscow, Leningradsky Prospekt str., 49

|

dikorovin@fa.ru

|

|

|

Other publications by this author

|

|

|

DOI: 10.25136/2409-7543.2023.3.43543

EDN: XNUXIB

Received:

09-07-2023

Published:

17-09-2023

Abstract:

The research is aimed at solving the problem of the execution of government contracts, the importance of using unstructured information and possible methods of analysis to improve the control and management of this process. The execution of government contracts has a direct impact on the security of the country, its interests, economy and political stability. Proper execution of these contracts contributes to the protection of national interests and ensures the security of the country in every sense. The object of research is algorithms used to extract information from texts. These algorithms include machine learning technologies and natural language processing. They are able to automatically find and structure various entities and data from government contracts. The scientific novelty of this study is the accounting of unstructured information in the analysis of the execution of government contracts. The authors drew attention to the problem-oriented texts in the contract documentation and suggested analyzing them with numerical indicators to assess the current state of the contract. Thus, a contribution was made to the development of methods for analyzing government contracts by taking into account unstructured information. The proposed methods for analyzing problem-oriented texts using machine learning. This approach can significantly improve the evaluation and management of the execution of government contracts. The results of the interpretation of problem-oriented texts can be used to optimize the risk assessment model for the execution of a government contract, as well as to increase its accuracy and efficiency.

Keywords:

government contracts, contract execution, digitalization, problem-oriented text, numerical indicators, unstructured information, machine learning, text analysis, deep learning, neural networks

This article is automatically translated.

You can find original text of the article here.

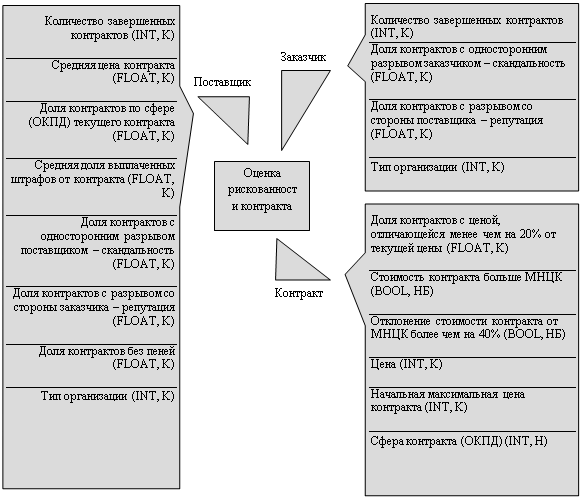

Introduction The system of state contracts was created to meet the needs of budgetary institutions. It allows departmental organizations to organize for themselves the supply of goods, the performance of works, the provision of services at the expense of budgetary funds and extra-budgetary funds. The modern problem of government orders is their feasibility: not all contractors are able to fulfill their obligations under the contract for various reasons. Digitalization has contributed to the transparency of the process of supporting government contracts: any interested person can get acquainted with all the details of the transaction between a budget institution and a future contractor on the state Internet portal. This allows you to control the targeted use of budget funds, which is no less important from the point of view of budget execution. As part of the execution of the federal law, performers fill out a lot of settlement, estimate and descriptive documentation, which is also freely available on the portal or websites for interaction between customers (government agencies) and performers (contractors, suppliers of goods or services). Such documents characterize the main parameters of any state order, and the text in such documents is problem-oriented. Problem-oriented texts (POT) are a special type of text data containing information about a specific problem or question. In addition, there are other sources of open and accessible unstructured information. The analysis of such sources allows us to obtain new numerical indicators to assess the current state of the contract. The problem of the execution of government contracts is considered by many experts and researchers from different scientific fields. Most often, this problem is taken into account by economists, since the study of the impact of budget funds on the real economy is relevant. As a rule, for the construction of mathematical and economic models, already known quantitative indicators are used, which are available on the open portal of public procurement. Thus, the researchers in their work [1] used all available numerical indicators (on the part of the customer, on the part of the supplier, as well as the parameters of the contract itself), which can affect the risks of non-fulfillment of contracts (shown in Figure 1). But the authors did not take into account the available unstructured information, which can also be expressed in numerical indicators. Therefore, it is necessary to pay special attention to the methods of SWEAT analysis using machine learning.

Figure 1. Formed numerical signs in the work [1]. There are a large number of scientific papers and software systems devoted to the use of various methods of analyzing unstructured information in various fields of activity: in civil aviation, in monitoring news and Internet sites, medicine, in text analysis of documents and information search in unstructured sources. The authors of the article [2] analyze the effectiveness of a computer model of language construction in its structuring and formalization, which contributes to the concretization of the meaning of oral and written text in working with large volumes of unstructured textual information. The article describes the basic concepts characterizing the syntactic relations of text messages in English, as well as the specifics of the approach to the analysis of syntactic structures of English-language texts. Using the example of a software product developed by the authors, the sequence of its algorithm implementation is analyzed. The paper [3] discusses the use of natural language processing methods for analyzing large volumes of clinically important information contained in unstructured form in electronic medical records. The article presents examples of successful application of some methods of natural language processing, as well as lists the main problems and limitations of their more effective use for the analysis of unstructured medical data. Articles [4-6] are devoted to the problem of automatic processing of unstructured documents. The volume of such documents is growing every year, and manual processing takes a lot of time and resources. To solve this problem, the general architecture of the information extraction system based on modern approaches of natural language processing and machine learning is presented. The presented solution allows you to process a large amount of unstructured information without writing code and preparing parsing templates. Taken together, these approaches to analyzing unstructured text take place when investigating the possibility of creating an additional indicator as part of assessing the risk of non-fulfillment of government contracts. The purpose of the study The purpose of this study is to study deep machine learning algorithms for analyzing textual information to form an additional indicator for evaluating the execution of government contracts. Material and methods of research In public procurement, standard types of documents are most often used, such as contracts, invoices, any cover letters and appendices. In other words, these are legal documents, and this is another example of POT. They contain legal terms, definitions and rules used to describe legislative procedures, legal processes, legal aspects of transactions, etc. They also contain valuable information about the parameters of the contract itself, which cannot be represented by a numerical indicator without preliminary processing. In particular, each contract has a section of the draft contract that contains the main parameters:

· Names and details of government agencies and suppliers. · Description of works, services or goods. · Dates and deadlines. · Amounts and terms of payment. · Technical specifications and requirements. · Provisions on guarantees and liability. · Sanctions and fines. · Details and signatures of the parties to the contract. Especially important among these characteristics are the description of works, services or goods, technical specifications and requirements, provisions on guarantees and liability, as well as sanctions and fines. Together, these parameters are the target for determining the complexity of the contract, which directly affects the quality of its execution by the contractor. The remaining parameters are no less valuable, but are most often presented as structured information on the public procurement platform itself. When analyzing problem-oriented texts, there are a number of difficulties: unstructured information does not have a clear structure and may contain a large amount of noise and insignificant data, a fairly large volume of raw texts. Traditional data analysis methods (relational databases and statistical methods) cannot process and extract information from such data. In the context of extracting information from the POT, two fundamental approaches can be considered, which are most often used by researchers and developers in solving this problem: manual definition of rules for text extraction and a statistical method using machine and deep learning algorithms. The advantage of manually drawing up rules is to control the extraction of information, high accuracy, but this method is applicable to small data sets. Therefore, to solve the problem of POT analysis, it is more effective to use natural language processing methods (NLP, text tonality determination), machine learning (ML, compilation of simple clustering and classification models of text data) and neural networks (NN). During the implementation of NLP processes, both standard algorithms and more complex word processing models may be required. Among these are tokenization of words, normalization of text, marking of parts of speech, lemmatization and stemming, removal of stop words, recognition of named entities. Simple methods can become the foundation for the implementation of complex text processing models, with the help of which numerical indicators and classes can be formulated. Such models can be called the tonality of the text, the definition of the subject of the text, the selection of the main, etc. At the stage of implementation of such models, the use of machine learning and deep learning models using neural networks should be considered. Previously, it was determined that the bulk of text data for government contracts are legal documents, respectively, it makes sense to consider models that are highly effective in analyzing legal POT. The main tool for extracting the entities of the state contract in this study will be the Natasha library [7]. The Natasha Library is a natural language processing tool specifically designed to extract entities from texts, including government contracts. The main task of Natasha is the automatic recognition and extraction of various entities from the text, including the names of organizations, positions, dates, amounts, deadlines and other important data that are usually contained in government contracts. It works on the basis of training models on large volumes of text data, which allows it to achieve high accuracy and completeness when extracting information from text. The library also provides additional functions, such as category search and text classification, which makes it useful for various natural language processing tasks related to government contracts. By applying these algorithms and downloading the documents of government contracts, we can extract entities that we will use as features in the training of machine learning models in the future. In our case, the essential features that are not present in the system of the IES of the State Budget are: provisions on guarantees and liability; sanctions and fines. The application of the described algorithms will complement the basic models for calculating the risk of execution of government contracts in order to increase the accuracy of their forecast and further use on an industrial scale. Research results and their discussion

As part of the current study, the main methods of extracting useful information from unstructured texts were proposed for consideration. In particular, it was found out that statistical methods of working with text seem to be the most effective for their further application when creating an additional indicator. It should also be taken into account that some methods are not applicable individually: for example, NLP methods allow you to pre-process raw texts, and using ML models on such data will allow you to classify them and determine numerical parameters for the formation of additional indicators. Thus, it is possible to build a formal algorithm for working with POT to obtain numerical characteristic indicators from it and the final indicator of the complexity of the contract. An example of such an algorithm is shown in Fig. 2.

Figure 2. Diagram of the algorithm for working with POT to obtain additional indicators. Thus, using this algorithm, we add new features to our final dataset that will allow us to track the execution of government contracts in more detail in machine learning models. In particular, the allocation of such entities as provisions on guarantees and liability, sanctions and fines allowed to increase the assessment of the quality of training models in the framework of government contracts compared with the first studies [8]. Conclusion Within the framework of the study, it was possible to study the main methods of working with problem-oriented texts. The methods of natural language processing, machine learning and deep learning are investigated in order to extract numerical indicators from textual information from government contracts. In the future, the current study will be taken as a basis for the implementation of an additional indication tool. The results of the interpretation of problem-oriented texts can be used to optimize the risk assessment model for the execution of a government contract, as well as to increase its accuracy and efficiency. For successful execution of government contracts, it is necessary to have accurate and timely forecasts of contract execution, which will reduce risks and improve the quality of this process.

References

1. Eliseev, D. A., & Romanov, D. A. (2018) Machine learning: forecasting public procurement risks. Open Systems. DBMS, 2, 42-44.

2. Uzkikh, G. Yu. (2023) Application of deep learning in natural language processing tasks. Bulletin of Science, 8(65), 310-312.

3. Serdyuk, Yu. P., Vlasova, N. A., & Momot S. R. (2023) System for extracting mentions of symptoms from natural language texts using neural networks. Software systems: theory and applications, 1(56), 95-123.

4. Kureychik, V. V., Rodzin, S. I., & Bova, V. V. (2022) Methods of deep learning for text processing in natural language. Izvestia of the Southern Federal University. Technical sciences, 2(226), 189-199.

5. Yezhkov, A. A. (2022) Analysis of research in the field of processing unstructured texts in medicine. Scientific review: topical issues of theory and practice, 23-26.

6. Proshina, M. V. (2022). Modern methods of natural language processing: neural networks. Economics of construction, 5, 27-42.

7. Tarabrin, M. A. (2022) The use of Natasha API tools in the development of an algorithm for depersonalization of text data. Actual issues of operation of security systems and protected telecommunication systems, 61-64.

8. Nikitin, P., Bakhtina, E., Korchagin, S., Korovin, D., Gorokhova, R., & Dolgov, V. (2023) Evaluation of the execution of government contracts in the field of energy by means of artificial intelligence. E3S Web of Conferences, 402, 03041. doi:10.1051/e3sconf/202340203041

Peer Review

Peer reviewers' evaluations remain confidential and are not disclosed to the public. Only external reviews, authorized for publication by the article's author(s), are made public. Typically, these final reviews are conducted after the manuscript's revision. Adhering to our double-blind review policy, the reviewer's identity is kept confidential.

The list of publisher reviewers can be found here.

The subject of the research of the reviewed article is algorithms for extracting information from problem-oriented texts. The chosen line of study is quite relevant and new. Government contracts become the basis of illustrations and practical material. As the author of the work notes, "the modern problem of government orders is their feasibility: not all contractors are able to fulfill their obligations under the contract for various reasons. Digitalization has contributed to the transparency of the process of supporting government contracts: any interested person can get acquainted with all the details of the transaction between a budget institution and a future contractor on the state Internet portal." In general, the concept of the work has been verified, the subject-object layer has been verified. The style is oriented towards the scientific type proper, terminological accuracy and versatility are noteworthy for research. For example, this is manifested in the following fragments: "as part of the execution of the federal law, performers fill out a lot of calculation, estimate and descriptive documentation, which is also freely available on the portal or sites for interaction between customers (government agencies) and performers (contractors, suppliers of goods or services). Such documents characterize the basic parameters of any government order, and the text in such documents is problem-oriented. Problem-oriented texts (POT) are a special type of text data containing information about a specific problem or question. In addition, there are other sources of open and accessible unstructured information. The analysis of such sources allows us to obtain new numerical indicators to assess the current state of the contract," or "there are a large number of scientific papers and software systems devoted to the use of various methods of analyzing unstructured information in diverse fields of activity: in civil aviation, in monitoring news and Internet sites, medicine, in text analysis of documents and information retrieval in unstructured sources", or "when analyzing problem-oriented texts, there are a number of difficulties: unstructured information does not have a clear structure and may contain a large amount of noise and insignificant data, a fairly large volume of raw texts. Traditional data analysis methods (relational databases and statistical methods) cannot process and extract information from such data. In the context of extracting information from the POT, two fundamental approaches can be considered, which are most often used by researchers and developers in solving this problem: manual definition of rules for text extraction and a statistical method using machine and deep learning algorithms," etc. The target component of the article is strictly marked, the methodological level is relevant. I note that the text has a syncretic character, the theoretical section is successfully combined with the actual practical one. The available text volume is enough to cover the topic, in my opinion, it is not necessary to expand the work particularly. I believe that the material can be useful to both prepared and untrained readers. Comments along the way are full-fledged: for example, "Natasha's main task is to automatically recognize and extract various entities from the text, including names of organizations, positions, dates, amounts, deadlines and other important data that are usually contained in government contracts. It works on the basis of training models on large amounts of text data, which allows it to achieve high accuracy and completeness when extracting information from text. The library also provides additional functions such as category search and text classification, which makes it useful for various natural language processing tasks related to government contracts," or "statistical methods of working with text seem to be the most effective for their further application when creating an additional indicator. It should also be noted that some methods are not applicable individually: for example, NLP methods allow you to pre-process raw texts, and using ML models on such data will allow you to classify them and determine numerical parameters for the formation of additional indicators. Thus, it is possible to build a formal algorithm for working with POT to obtain numerical characteristic indicators and a final indicator of the complexity of the contract from it," etc. The visual mode of systematization of the accumulated data is quite successfully correlated with the text part; diagrams, drawings are convenient for perception / reception. The conclusions of the text are somewhat formal, but they correspond to the main part. The prospect of using data has been successfully written, this is an indicator of scientific responsibility: "in the future, the current research will be taken as the basis for the implementation of an additional indication tool. The results of the interpretation of problem-oriented texts can be used to optimize the risk assessment model for the execution of a government contract, as well as to increase its accuracy and efficiency. For the successful execution of government contracts, it is necessary to have accurate and timely forecasts of contract execution, which will reduce risks and improve the quality of this process." The formal requirements of the publication are taken into account, the list of bibliographic sources contains works of different years and different types. I recommend the article "Algorithms for extracting information from problem-oriented texts using the example of government contracts" for open publication in the scientific journal "Security Issues".

Link to this article

You can simply select and copy link from below text field.

|

|