
Philosophical Thought

Reference:

Zelenskii A.A., Gribkov A.A.

Actor modeling of real-time cognitive systems: ontological basis and software-mathematical implementation

// Philosophical Thought.

2024. № 1.

P. 1-12.

DOI: 10.25136/2409-8728.2024.1.69254 EDN: LVEGUM URL: https://en.nbpublish.com/library_read_article.php?id=69254

Actor modeling of real-time cognitive systems: ontological basis and software-mathematical implementation

Zelenskii Aleksandr Aleksandrovich

ORCID: 0000-0002-3464-538X

PhD in Technical Science

Leading researcher, Scientific and Production Complex "Technological Center"

124498, Russia, Moscow, Zelenograd, Shokin Square, 1, building 7


zelenskyaa@gmail.com


Gribkov Andrei Armovich

ORCID: 0000-0002-9734-105X

Doctor of Technical Science

Senior Researcher, Scientific and Production Complex "Technological Center"

124498, Russia, Moscow, Zelenograd, Shokin Square, 1, building 7


andarmo@yandex.ru



Received:

07-12-2023

Published:

09-01-2024

Abstract:

The article is devoted to the problem of increasing the reliability of modeling cognitive systems, a class to which the authors assign not only human intelligence but also artificial intelligence systems and intelligent control systems for production facilities, technological processes and complex equipment. It is shown that the use of cognitive systems for solving control problems imposes very high speed requirements on them. These requirements, combined with the need to simplify modeling methods as the modeled object becomes more complex, determine the choice of an approach to modeling cognitive systems. Models should be based on simple algorithms such as trend detection and correlation and (for solving intellectual problems) on algorithms that apply various patterns of forms and laws. In addition, the models should be decentralized. Decentralized systems formed from a large number of autonomous elements can be adequately represented within the framework of agent-based models. For cognitive systems, two such models are the most elaborated: the actor model and the reactor model. Actor models of cognitive systems have two possible implementations: as an instrumental model or as a simulation model. Both implementations are legitimate, but the instrumental model offers better prospects for a reliable description, because it provides incomparably higher speed and also admits the variability of the modeled reality. The actor model can be implemented in a large number of existing programming languages. Simulation actor models can be created in most languages that support actors. Instrumental actor models, however, require a speed that is unattainable with imperative programming; in this case, the optimal solution is actor metaprogramming, which can be implemented in many existing languages.

Keywords:

cognitive system, control, reliability, rapidity, modeling, trends, patterns, decentralization, actor, metaprogramming

This article is automatically translated.


Introduction

The concept of a cognitive system has changed significantly in recent decades. Until recently, the term was assumed by default to refer to the human system of cognition; today, with the rapid development of information technology, a much wider class of systems is called cognitive. According to the currently accepted interpretation, a cognitive system (from Latin cognitio: cognition, recognition, familiarization) is a multi-level system that recognizes and memorizes information, makes decisions, and stores, explains, understands and produces new knowledge [1]. Most of the key trends currently observed in global economic and technological development are related to the use of artificial cognitive systems. The most complex of them are cognitive artificial intelligence systems; less complex are cognitive control systems for complex technological equipment, technological processes and production facilities. The latter include, in particular, digital production systems [2]. Because cognitive systems are used for real-time control, they require high speed, as opposed to the high performance characteristic of modern computing systems. The concepts of speed and performance are close, but there are fundamental differences between them, which give rise to different approaches to their technological implementation. For control systems, speed and performance are defined as follows [3]:

– the speed of the control system is the inverse of the duration of the time interval (control cycle) required to perform the set of operations (usually relatively small) necessary for current control;
– the performance of the control system is the number of elementary control operations per unit of time, calculated as an average over a relatively long time interval during which a large number of operations are performed.

Modern computers, if used as control systems, have high performance but low speed. That is why they are not usually used to control complex objects in real time. The insufficient speed of modern computers is due to their von Neumann architecture, whose main disadvantage is the sequential execution of operations (both computations and memory accesses). Currently, only one way to increase control speed is known: parallel execution of operations and, more generally, decentralization of control. This is how nature solves the problem of the speed of cognitive systems: decentralized control is implemented both at the level of the cells, organs or metabolic processes of an individual animal or human and at the level of their suprasystems (human society, an ant colony, a bee family, a pack of wolves, a herd of zebras, etc.). In this article, the authors aim to identify approaches to building models of controlled objects that have the required reliability and provide the necessary control speed. The study also addresses the relationship of these models to reality and the problems of their optimal software and mathematical implementation.

The problem of reliable modeling of reality

The fundamental problem of the theory of cognition, science and technology is the impossibility, in principle, of an ontological description of real objects, i.e., of describing them as they are, independently of cognition. The theory of knowledge, science and technology were created by people and are based on generalized concepts, a hierarchy of forms and laws, and probabilistic description.
Meanwhile, in the real world everything is concrete and realized here and now, and, in general, hierarchical constructions of objects do not reduce the number of parameters that define them. As a result, discrepancies between being and models of being are inevitable. This discrepancy cannot be overcome and absolutely reliable models of real objects cannot be built, but models can be made more adequate. Possible approaches to improving the adequacy of models can be derived from the secondary laws of objects formulated within the empirical-metaphysical general theory of systems [4]. Two secondary laws of objects are basic for the problem under consideration:

1. The law of stable modeling [5]: as the completeness of the description of an object increases, modeling methods should be simplified in order to preserve the stability of the description. The law of stable modeling extends the principle of incompatibility [6, p. 10], according to which the complexity of a system and the accuracy with which it can be analyzed are inversely related.

2. The law of achieving stability through the architecture of objects [7]: the stability of an object can be achieved either by forming an egressive connection in it (in particular, through monocentrism) or by increasing the number of connections between elements within the object (in particular, through autonomization and decentralization).

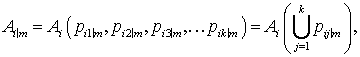

The tendency to simplify modeling methods as the complexity of the object or the completeness of its description increases is clearly manifested in the practice of simulation modeling, including modeling based on numerical methods. If the relationships between the parameters of a complex object cannot be determined within a single analytical description, we replace the generalized description with local ones that connect individual parameters within limited ranges of their values. An illustrative example is the simulation of the gravitational interaction of three or more cosmic bodies. As is known, an exact analytical description of the motion (along elliptical trajectories) is possible in the general case only for two-body systems. Nevertheless, simulation based on numerical methods makes it possible to calculate the trajectories of bodies in multi-body systems (for example, the Solar System) with high accuracy. Numerical modeling methods, including the simplest of them, linear interpolation and extrapolation, rely least on knowledge of the internal laws and relationships of the simulated object and therefore provide the greatest stability of the solutions obtained. As an illustration, consider the problem of interpolating a functional dependence from its given values (points), and compare two approaches corresponding to more and less complex modeling methods: polynomial interpolation and linear interpolation. In the first case, the solution is a polynomial of degree n − 1 passing through all n given points. Between these points, however, the deviation of this function from the trend can be very large, and it grows with the number of points (since an increase in the number of points complicates the modeling method).
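The behavior described above for equally spaced points (known in numerical analysis as Runge's phenomenon) can be reproduced with a short sketch. It is an illustration, not part of the authors' work: the test function 1/(1 + 25x²) on [−1, 1], the node counts and the helper names (`runge`, `lagrange_eval`, `linear_eval`, `max_error`) are assumptions chosen for the example. For each number of points, it measures the largest deviation of each interpolant from the underlying smooth dependence on a fine grid.

```python
import bisect
from typing import Callable, List, Sequence

Interpolant = Callable[[Sequence[float], Sequence[float], float], float]


def runge(x: float) -> float:
    """Smooth dependence ("trend") to be reconstructed from sampled points."""
    return 1.0 / (1.0 + 25.0 * x * x)


def nodes(n: int) -> List[float]:
    """n equally spaced points on [-1, 1], n >= 2."""
    return [-1.0 + 2.0 * k / (n - 1) for k in range(n)]


def lagrange_eval(xs: Sequence[float], ys: Sequence[float], x: float) -> float:
    """Value at x of the unique polynomial of degree n-1 through all n points."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def linear_eval(xs: Sequence[float], ys: Sequence[float], x: float) -> float:
    """Value at x of the piecewise linear interpolant; xs must be sorted ascending."""
    k = bisect.bisect_right(xs, x) - 1
    k = min(max(k, 0), len(xs) - 2)  # clamp so x = xs[-1] uses the last segment
    t = (x - xs[k]) / (xs[k + 1] - xs[k])
    return ys[k] + t * (ys[k + 1] - ys[k])


def max_error(method: Interpolant, n: int, samples: int = 2001) -> float:
    """Largest deviation from runge() over a fine grid, using n interpolation points."""
    xs = nodes(n)
    ys = [runge(x) for x in xs]
    grid = [-1.0 + 2.0 * s / (samples - 1) for s in range(samples)]
    return max(abs(method(xs, ys, x) - runge(x)) for x in grid)


if __name__ == "__main__":
    for n in (5, 9, 17):
        print(f"n = {n:2d}: polynomial {max_error(lagrange_eval, n):9.4f}, "
              f"linear {max_error(linear_eval, n):.4f}")
```

Running it should show the maximum error of the polynomial growing as points are added, while that of the piecewise linear interpolant shrinks.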
If linear interpolation is used, the resulting piecewise linear function follows the trend incomparably better, which means that its predictive capability is higher. Moreover, increasing the number of points does not increase the deviation from the trend but reduces it, since the modeling method does not become more complicated while the distances between points decrease. In the modeling of cognitive systems, this simplification of modeling methods means using the simplest data processing algorithms, namely determining correlations and trends. Such methods are used in modern machine learning systems based on big data technologies [8], which are the least complex implementation of artificial intelligence. More complex cognitive systems, for example those related to human consciousness, also involve, along with correlations and trends, patterns (recurring forms or relationships realized in different subject areas and at different levels of the universe) [9]; these correspond to somewhat more complex modeling methods that require a neural network with a large number and variety of elements and connections. Methods based on patterns cannot be called complex; nevertheless, it must be acknowledged that the overall goal of simplifying modeling methods cannot be fully achieved. The law of achieving stability through the architecture of objects mentioned above indicates two possible ways: centralization, or an increase in the number of connections between elements within an object through autonomization and decentralization. The authors' research [3] on the realizability of real-time control of complex objects (in particular, technological equipment) has shown that centralization of the control system is incompatible with high speed.
Therefore, the only way to increase the stability of a control system that meets the requirement of high speed (including real-time control) is its decentralization.

Agent-based modeling of decentralized systems

To adequately represent decentralized systems formed from a large number of elements that interact with each other while remaining autonomous, special simulation models called agent-based models (ABM) are used [10]. The elements of such models are agents: unique and autonomous entities that interact locally with each other and with the environment [11, p. 10]. Agent-based models can represent decentralized systems of any nature: physical, social, economic, logistical, educational, and informational. In the context of this study, we are interested in information systems, namely cognitive systems. For cognitive systems, including artificial intelligence systems, the two most developed software and mathematical implementations of the agent-oriented model are the actor model and the reactor model (relational actor model). The actor and reactor models were originally used to implement parallel computing, but their functionality has since expanded significantly. The actor model represents a system, in software and mathematical terms, as a set of actors: universal execution primitives endowed with specified properties that interact with other actors through message passing and together with them ensure the functioning of the system [12,13]. Actors can be virtual entities or have a physical implementation in the form of a processor or another device. A generalized mathematical description of the actors in the actor model can be written as

$$A_i^{m} = f_i\left(p_{i1}^{m}, p_{i2}^{m}, \dots, p_{ik_i}^{m}\right), \quad i = 1, \dots, n, \qquad (1)$$

where $p_{ij}^{m}$ is the mth (current) value of the jth parameter of the ith actor, $A_i^{m}$ is the corresponding state of the ith actor, $k_i$ is the number of its parameters, and n is the number of actors.
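To make the message-passing idea concrete, here is a minimal single-threaded sketch in Python. It is not taken from the article or from any actor library: the `Actor` and `Dispatcher` classes, the `regulator` and `actuator` behaviors, and the proportional-control rule are hypothetical. Each actor keeps its own parameters (the values that define its state in the sense of formula (1)) and a private mailbox; actors never read each other's parameters directly and influence one another only by sending messages through the dispatcher.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

# Behavior signature: (receiving actor, message, dispatcher) -> None
Behavior = Callable[["Actor", Any, "Dispatcher"], None]


@dataclass
class Actor:
    """Hypothetical minimal actor: private parameters, a mailbox and a behavior."""
    name: str
    behavior: Behavior
    params: Dict[str, Any] = field(default_factory=dict)  # this actor's own parameter values
    mailbox: deque = field(default_factory=deque)
    alive: bool = True  # a behavior may set this to False to stop the actor


class Dispatcher:
    """Delivers queued messages one at a time in round-robin order."""

    def __init__(self) -> None:
        self.actors: Dict[str, Actor] = {}

    def spawn(self, name: str, behavior: Behavior, **params: Any) -> str:
        # Behaviors may also call spawn() to create child actors.
        self.actors[name] = Actor(name, behavior, dict(params))
        return name

    def send(self, to: str, msg: Any) -> None:
        actor = self.actors.get(to)
        if actor is not None and actor.alive:  # messages to unknown or stopped actors are dropped
            actor.mailbox.append(msg)

    def run(self, max_steps: int = 10_000) -> int:
        """Process messages until all mailboxes are empty; return the number delivered."""
        steps = 0
        while steps < max_steps:
            ready = [a for a in self.actors.values() if a.alive and a.mailbox]
            if not ready:
                break
            for actor in ready:
                if actor.alive and actor.mailbox and steps < max_steps:
                    actor.behavior(actor, actor.mailbox.popleft(), self)
                    steps += 1
        return steps


def regulator(actor: Actor, reading: Any, d: Dispatcher) -> None:
    """Proportional controller: u = k * (setpoint - reading), sent to the actuator."""
    u = actor.params["k"] * (actor.params["setpoint"] - reading)
    d.send("actuator", u)


def actuator(actor: Actor, command: Any, d: Dispatcher) -> None:
    """Records every command it receives."""
    actor.params.setdefault("log", []).append(command)


if __name__ == "__main__":
    d = Dispatcher()
    d.spawn("regulator", regulator, k=0.5, setpoint=10.0)
    d.spawn("actuator", actuator)
    for reading in (8.0, 9.0, 10.0):  # readings arriving from a sensor
        d.send("regulator", reading)
    d.run()
    print(d.actors["actuator"].params["log"])
```

A real actor runtime would deliver messages concurrently (for example, one thread or processor per actor); the round-robin loop here only preserves the essential rule that state changes are driven by messages.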

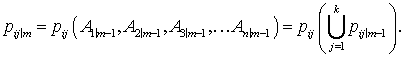

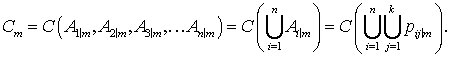

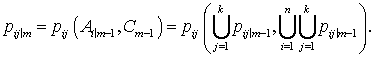

According to formula (1), the state of an actor is a function of the values of its parameters. The value of the parameter $p_{ij}^{m}$, in turn, is determined by the previous states of all actors included in the actor model:

$$p_{ij}^{m} = \varphi_{ij}\left(A_1^{m-1}, A_2^{m-1}, \dots, A_n^{m-1}\right), \qquad (2)$$

where $\varphi_{ij}$ denotes the dependence of the jth parameter of the ith actor on these states. One significant property of actors is their ability to create new actors. This property is implemented by removing the fixed upper bound n on the index i. While the actor model is running, the number n of actors can either increase through the birth of new actors (created by existing parent actors) or decrease (actors can self-destruct, be destroyed by other actors, or "die of old age" after a given number of activity cycles). In the relational actor model [14,15], control is carried out not by exchanging messages, as in the actor model, but by reacting to events. Each relational actor can react to events of many different actors at any time. Relational actors, like "ordinary" ones, can be born and die. A variant of the relational actor model is the continuum model [16]. The continuum model represents the functioning of a system through the interaction of elements (actors) not with each other but with an integral object (the continuum) that reflects all the properties of the elements (actors) significant for the functioning of the system, including the events occurring with them. Each actor interacts only with the continuum, and the continuum interacts with all actors. In the continuum model, as in the actor model, an actor is described mathematically by the values of its parameters (see (1)).
The state of the continuum is described on the basis of the states of all actors included in the continuum model:

$$C^{m} = F\left(A_1^{m}, A_2^{m}, \dots, A_n^{m}\right), \qquad (3)$$

where $C^{m}$ is the state of the continuum at step m. The value of the parameter $p_{ij}^{m}$, in turn, is determined by the previous states of the actor to which this parameter belongs and of the continuum:

$$p_{ij}^{m} = \psi_{ij}\left(A_i^{m-1}, C^{m-1}\right). \qquad (4)$$

Analysis of expressions (2) and (4) shows an inevitable delay in the representation of the system state (at least one step, or control cycle). This means that high speed of practical implementation is an essential requirement for agent-based models (and, in general, for simulation models of dynamic systems). However, as will be shown below, this requirement is not relevant in all cases.

The information execution environment of the agent-oriented model

As already noted, actor models are used to represent cognitive systems. Actor models, a special case of agent-based models, are specific in that they are purely informational, are implemented in software, and ensure the parallelism of operations (computational and others). Actors, as information objects, exist and function in an information environment. This representation places actor models within the concept of consciousness developed by the authors, which is considered independently of its most studied implementation, human intelligence. According to this concept, consciousness is an information environment in which an extended model of reality formed from information objects is realized [17]. As a result, the actor model corresponds as closely as possible to the task of describing cognitive systems, including artificial intelligence.
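The one-step delay noted above can be illustrated with a small sketch of the update structure described in expressions (2) to (4). It is an assumption-laden illustration, not the authors' model: each actor's state is a single float, the state is taken to be equal to its only parameter, and the update rules passed in as `phi`, `psi` and `F` (here, simple averaging) are hypothetical. In the actor model, every new value is computed from a frozen copy of the previous states of all actors; in the continuum model, each actor sees only its own previous state and the previous continuum, and the continuum is then recomputed from the new states.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical update rules (not from the article); states are floats and f_i is the identity.
Phi = Callable[[int, Sequence[float]], float]  # new value of actor i from previous states of ALL actors
Psi = Callable[[float, float], float]          # new value from the actor's own previous state and previous continuum
Agg = Callable[[Sequence[float]], float]       # continuum state from the current states of all actors


def actor_step(states: Sequence[float], phi: Phi) -> List[float]:
    """One control cycle of the actor model (structure of expression (2))."""
    prev = tuple(states)  # freeze step m-1 so updates within a cycle cannot see each other
    return [phi(i, prev) for i in range(len(prev))]


def continuum_step(states: Sequence[float], continuum: float,
                   psi: Psi, F: Agg) -> Tuple[List[float], float]:
    """One control cycle of the continuum model (structure of expressions (3) and (4))."""
    new_states = [psi(a, continuum) for a in states]  # uses step m-1 values only
    return new_states, F(new_states)                  # continuum at step m


def mean(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


if __name__ == "__main__":
    impulse = [1.0, 0.0, 0.0, 0.0]  # a disturbance appears at actor 0 in cycle 0
    # Actor model: every actor moves to the average of all previous states.
    print("actor model, cycle 1:", actor_step(impulse, lambda i, prev: mean(prev)))
    # Continuum model: each actor moves halfway towards the previous continuum state.
    states, c = continuum_step(impulse, mean(impulse), lambda a, cont: 0.5 * a + 0.5 * cont, mean)
    print("continuum model, cycle 1:", states, "continuum:", c)
```

In both models the other actors (indices 1 to 3) first react to the disturbance only in cycle 1, which is the delay of at least one control cycle implied by expressions (2) and (4).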
Actor models of cognitive systems, like other simulation models, can be implemented as information models in two ways: as an instrumental model or as a simulation (virtual) model. The instrumental actor model of a cognitive system defines the organization of information transfer between actors, each of which represents in the information environment the properties of a certain real object. Examples of such real objects are the functional modules of a control system: the core, computing modules, actuator controllers, sensing modules, a neural network (which can be represented either by a single actor or by a complex system with a nested actor model), sensors, etc. [18].

The function of a simulation (virtual) actor model of a cognitive system is not to control the system (including in real time) but to study it. When a simulation model of a cognitive system is built, the task of increasing its speed is not addressed: insufficient speed can always be compensated at the stage of analyzing the simulation results (for example, by time scaling). As a result, even a fairly complex simulation model can be implemented on a computer or other computing system with the von Neumann architecture (with sequential execution of operations). Actors in the simulation actor model are certainly information objects, but they differ significantly from actors in the instrumental model: as already noted, actors in the instrumental model represent the properties of real objects, whereas actors in the simulation model are ideal carriers of the parameters set by the researcher during simulation. At the same time, the simulation model cannot be considered completely deterministic. When artificial neural networks are used to implement it, the modeling outcomes (in particular, calculations) become variable. However, this variability concerns the interpretation and processing of data, not the variability of the data themselves, i.e., not the variability of the simulated reality that arises from the existence of a large number of relationships and dependencies not accounted for in the model. Can simulation actor models be used for cognitive systems operating in real time? Despite the inevitable decrease in the reliability of the results produced by the cognitive system, such use may be justified under certain conditions. There are only two conditions: the data must be sufficiently complete for modeling, and they must be processed at a sufficiently high speed.
Whether the data are sufficient for modeling is assessed in each individual case and verified in practical use. As for the speed of data processing, building simulation-based cognitive systems (cognitive systems that use simulation actor models) capable of controlling complex systems (digital production, complex multi-axis machines, industrial robots, etc.) requires technologies that are still emerging. Such technologies include, in particular, quantum computing [19]: solving problems by manipulating quantum objects such as atoms, molecules, photons, electrons and specially created macrostructures.

Actor metaprogramming

The actor model historically took shape as a mathematical model of parallel computing implemented in software. The actor-oriented languages ABCL (Actor-Based Concurrent Language), ActorScript, AmbientTalk, etc. were created specifically for programming actor models. Some functional languages [20] (Erlang, Scala, Elixir, etc.) provide built-in support for actors. With special extension libraries, many general-purpose object-oriented imperative languages can also work with actors: C++, C#, Java, Python, Ruby, etc. All of these languages are, to a greater or lesser extent, suitable for simulating cognitive systems; in most cases, however, they are unsuitable for the imperative software representation of instrumental actor models of cognitive systems because of the insufficient speed of the resulting programs (at the current level of the electronic component base) and the congestion of data transmission channels, especially in the case of imperative programming. According to the authors, the optimal approach to the software implementation of instrumental actor models of cognitive systems should be based on metaprogramming [21].
In metaprogramming, an actor is not a primitive (as in actor-oriented languages) or a class (as in object-oriented programming) but an instance of a separate program, emulated by a dispatcher program or by previously emulated program instances [22,23]. Metaprogramming can be implemented in many existing languages: C++, C#, Java, Python, Ruby, etc. What makes actor metaprogramming effective for instrumental models of cognitive systems is that each actor (a separate program) reflects in the execution environment (information environment) the properties of a separate real object: an element of the cognitive system that can function autonomously and, in particular, has its own memory. This radically reduces the volume of data transmitted over communication channels, eliminates queues and increases the speed of the cognitive system. Thus, actor metaprogramming is naturally consistent with a memory-centric architecture of the cognitive system [24].

Conclusions

Summarizing the reflections in this article, the following key conclusions can be formulated:

1. The concept of cognitive systems is changing. Cognitive systems are no longer necessarily associated with human intellectual activity; the term also covers various technical systems, including artificial intelligence systems and automatic control systems for technological processes, production facilities and complex technological equipment.

2. Cognitive systems are widely used to solve real-time control problems. This makes it necessary to increase not only their performance but also their speed. This task is solved through the parallelism of operations and, more generally, through the decentralization of control.

3. The key directions for increasing the reliability of modeling reality, including modeling performed by cognitive systems, are reducing the complexity of modeling methods as the complexity of the description increases (the complexity of the object or the completeness of its representation) and improving the architecture of the model. The authors' previous research has shown that the speed requirement is compatible only with an architecture that increases the number of connections between elements, which corresponds to decentralization.

4. For the modeling of cognitive systems, the reliability of modeling reality is increased by using the simplest algorithms, such as determining correlations and trends, which are already implemented by artificial neural networks, as well as somewhat more complex algorithms based on patterns. At the same time, the architecture of real-time cognitive systems should be decentralized.

5. Decentralized systems formed from a large number of autonomous elements can be adequately represented within the framework of agent-oriented models. For cognitive systems, the two most developed software and mathematical implementations of the agent-oriented model are the actor model and the reactor model. A variant of the reactor model is the continuum model, which represents the functioning of the system through the interaction of actors not with each other but with a continuum: an integral object reflecting the properties of all actors that are significant for the system, for example, the events occurring with them.

6. Actor models of cognitive systems can be implemented in two ways: as an instrumental model or as a simulation (virtual) model.
Both implementations are legitimate; however, the instrumental model offers better prospects for a reliable description, since it provides (at the current level of technology) incomparably higher speed and also admits the variability of the simulated reality due to the incompleteness of the model.

7. The actor model can be implemented in a large number of existing programming languages: special actor-oriented languages, functional languages and general-purpose languages. Simulation actor models can be created in most languages that work with actors. The implementation of instrumental actor models requires a speed that is unattainable with imperative programming. In this case, the optimal solution is actor metaprogramming, which is feasible in many existing languages (C++, C#, Java, Python, Ruby, etc.).

References

1. Philosophy: Encyclopedic Dictionary. (2004). Edited by A.A. Ivin. Moscow: Gardariki.

2. Mikryukov, A.A. (2018). Cognitive technologies in decision support systems in the digital economy. Innovations and Investments, 6, 127-131.

3. Zelenskiy, A.A., & Gribkov, A.A. (2023). Ontological aspects of the problem of realizability of management of complex systems. Philosophical Thought, 12, 21-31.

4. Gribkov, A.A. (2023). Empirical-metaphysical approach to the construction of the general theory of systems. Society: Philosophy, History, Culture, 4, 14-21.

5. Gribkov, A.A. (2023). Definition of secondary laws and properties of objects in the general theory of systems. Part 1. Methodological Approach on the Basis of Object Classification. Context and Reflexion: Philosophy about the World and Man, 12(5-6A), 17-30.

6. Zadeh, L.A. (1976). The concept of linguistic variable and its application to approximate decision making. Moscow: Mir.

7. Gribkov, A.A. (2023). Definition of secondary laws and properties of objects in the general theory of systems. Part 2. Methodological Approach Based on the Classification of Patterns. Context and Reflexion: Philosophy about the World and Man, 12(9A), 5-15.

8. Malyavkina, L.I., Dumchina, O.A., & Savvina, E.V. (2021). Methods and Techniques of Big Data Analysis. Infrastructure of Digital Development of Education and Business: Proceedings of the National Scientific and Practical Conference, Oryol, April 01-30, 2021. Oryol: Oryol State University of Economics and Trade, 34-39.

9. Duin, R.P.W. (2021). The Origin of Patterns. Frontiers in Computer Science, 3, 747195.

10. Burilina, M.A., & Akhmadeev, B.A. (2016). Analysis of the diversity of architectures and methods of modeling decentralized systems based on agent-based approach. Creative Economy, 10(7), 829-848.

11. Railsback, S.F., & Grimm, V. (2019). Agent-Based and Individual-Based Modeling: A Practical Introduction. Second Edition. Princeton University Press.

12. Burgin, M. (2017). Systems, Actors and Agents: Operation in a multicomponent environment. Retrieved from arXiv:1711.08319

13. Rinaldi, L., Torquati, M., Mencagli, G., Danelutto, M., & Menga, T. (2019). Accelerating Actor-based Applications with Parallel Patterns. 27th Euromicro International Conference on Parallel, Distributed and Network-Based Processing, 140-147.

14. Shah, V., & Vaz Salles, M.A. (2018). Reactors: A case for predictable, virtualized actor database systems. International Conference on Management of Data, 259-274.

15. Lohstroh, M., Menard, S., Bateni, S., & Lee, E. (2021). Toward a Lingua Franca for Deterministic Concurrent Systems. ACM Transactions on Embedded Computing Systems, 20(4), 1-27.

16. Khoroshevskiy, V.G. (2010). Distributed computing systems with programmable structure. Vestnik SibGUTI, 2, 3-41.

17. Gribkov, A.A., & Zelenskiy, A.A. (2023). Definition of consciousness, self-consciousness and subjectness within the information concept. Philosophy and Culture, 12, 1-14.

18. Zelenskiy, A.A., Ilyukhin, Y.V., & Gribkov, A.A. (2021). Memory-centered models of the motion control systems for the industrial robots. Bulletin of Moscow Aviation Institute, 28(4), 245-256.

19. Fedorov, A. (2019). Quantum computing: from science to applications. Open Systems. SUBD, 3, 14.

20. Batko, P., & Kuta, M. (2018). Actor model of Anemone functional language. The Journal of Supercomputing, 74, 1485-1496.

21. Skripkin, S.K., & Vorozhtsova, T.N. (2006). Modern methods of metaprogramming and their distributed systems of perspective development technology. Vestnik IrSTU, 2(26), 90-97.

22. Neuendorffer, S. (2004). Actor-Oriented Metaprogramming. PhD Thesis, University of California, Berkeley, December 21. Retrieved from https://ptolemy.berkeley.edu/publications/papers/04/StevesThesis/

23. Zelenskiy, A.A., Ivanovskiy, S.P., Ilyukhin, Yu.V., & Gribkov, A.A. (2022). Programming of the trusted memory-centered motion control system of the robotic and mechatronic systems. Bulletin of Moscow Aviation Institute, 29(4), 197-210.

24. Kalyaev, I., & Zaborovskiy, V. (2019). Artificial intelligence: from metaphor to technical solutions. Control Engineering Russia, 5(83), 26-31.

Peer Review

Peer reviewers' evaluations remain confidential and are not disclosed to the public. Only external reviews, authorized for publication by the article's author(s), are made public. Typically, these final reviews are conducted after the manuscript's revision. Adhering to our double-blind review policy, the reviewer's identity is kept confidential.


The reviewed article is a professional study of the problems of using artificial cognitive systems to improve the efficiency of managing various technological processes in real time. The authors draw attention to the difference between "performance" and "speed" as characteristics of cognitive systems due to the different organization of operations – "sequential" or "parallel". It is only in the case of the introduction of parallel operations that it is possible to "decentralize" management and achieve a high degree of performance necessary for the effective use of artificial cognitive systems. It seems, however, that the term "decentralization" (which, judging by the reviewed article, is fundamentally important for this field of research) is not entirely successful, since it causes negative connotations. As a more acceptable one, any arbitrary expression introduced into scientific circulation together with a (as accurate as possible) definition can be used (a classic example is the introduction of the term "quark" into scientific circulation). The point is that it is not necessary to transmit the control signal "vertically" if the self-organization of the system can be carried out "at the horizontal level". At the same time, the authors talk about the need to "reduce the complexity of modeling methods as the complexity of the description increases (the complexity of the object or the completeness of its representation), as well as improve the architecture of this model." We repeat that the "dubious" "decentralization" is not necessary to convey this meaning. Further, the authors consider two ways of implementing the "agent-oriented model" – "actor" and "reactor": "A variant of the reactor model is a continuum model, which is based on the representation of the functioning of the system through the interaction of actors not with each other, but with a continuum – some integral object reflecting the properties of all actors that are significant for the system". 
In turn, actor models of cognitive systems can be implemented in the form of an instrumental or virtual (simulation) model. According to the authors, the possibilities of "reliable description when using an instrumental model are higher, since it provides ... higher performance, and also assumes variability of the simulated reality due to the incompleteness of the assumed model." And the use of programming methods already available today, according to the authors, makes it possible to solve these problems. Familiarity with the article allows us to state that it can be published in a scientific journal; however, there are some doubts about the expediency of publishing it in a philosophical journal. It seems that the authors could, as part of routine revision, supplement the text with material demonstrating the relevance of their results for other systems, for example social ones, in which an insufficiently high speed may also be an obstacle to improving management efficiency (in "authoritatively" organized systems in which all decisions are made by a single subject or require its legalization). This proposal is, in fact, "on the surface"; perhaps the authors could illustrate the importance of their work for related fields with other examples. It is also necessary to correct some punctuation errors ("summarizing the reflections collected in this article, it is possible...", etc.), as well as typos ("presentation of the functioning ("functioning, – reviewer) of the system", etc.). However, these comments are secondary compared with the advantages of the reviewed article, and I recommend accepting it for publication.
